The test phase does not end once the product is in front of the public. To keep the chatbot developing correctly and continuously, it is essential to keep testing after the release. This article covers the methods and the tools.

A well-structured and engaging UX in chatbots can increase the retention rate by up to 70%.

Chatbot Magazine

Two broad categories of tests are commonly run after the release: A/B testing and performance testing (speed and security). You can also choose a test designed specifically for your bot.

A/B Testing

This type of test is mainly useful for understanding which part of the flow converts more users. It is carried out by comparing two or more variants of the same part. It involves testing blocks of the flow, such as different onboarding scenarios or purchase flows. Thanks to this test, the User Experience improves and, consequently, the abandonment rate decreases.

This test is a way to experiment with different features of the chatbot: users are randomly shown one variant or another, and the data collected lets you choose which variant to keep. It can concern a conversational aspect or a visual factor.
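One way to do the random split is sketched below. It is a minimal illustration, not a description of any particular platform: the function name `assign_variant`, the experiment label and the variant names are all hypothetical. Hashing the user ID, rather than drawing a random number on each visit, keeps a returning user in the same variant.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Map a user to one variant; the same user always gets the same variant.

    `experiment` is a hypothetical label (e.g. "welcome_message") so that
    different tests split the same users independently.
    """
    if not variants:
        raise ValueError("at least one variant is required")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a large integer bucket number.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid, assign_variant(uid, "welcome_message", ["formal", "emoji"]))
```

With enough users the split comes out roughly even, and no assignment table needs to be stored.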

Conversational aspects

As for the conversational flow, it is important to test the first message, the welcome message: will it be a formal message? Will there be an emoji? The first contact determines the engagement of the user, so it is strictly related to the conversation. If the user had already used the chatbot in the past, the collected data are essential to carry on an impactful, non-repetitive and personalized conversation. If it is the first contact between the chatbot and the user, it is important to grasp the salient characteristics from the first messages. Based on this, for example, the formality of the language can be set to improve the engagement.

We are instinctively led to interact more with people who are similar to us, so the answers of the bot and the language it uses will have a strong impact on the conversion.

The metric to be measured in the A/B test will be the ROE (Return On Engagement).

Visual Aspect

The design is as important as the language. An A/B test can be about the color of the frame or of a button.

At the moment there isn’t any in-depth study on which of the two factors (language or visual) has a greater impact.

How to run an A/B Test

The steps to run a chatbot A/B test are:

- Choose a platform to conduct the A/B testing

- Analyze the chatbot funnel and create a list of factors (visual and language) that you want to test

- Gather as much in-scope data as possible for the subsequent analysis

- Choose, based on data, the best variant or incorporate new sections into the flow.
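The last step, choosing a variant based on data, can be sketched with a two-proportion z-test on conversion counts. This is one common statistical check and not necessarily the one a given A/B platform uses; the function name and the example numbers are illustrative assumptions.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare the conversion rates of variants A and B.

    Returns (z, p_value), where p_value is two-sided. A small p_value
    suggests the difference between the variants is unlikely to be chance.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both variants need at least one user")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the hypothesis that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: 100 of 1000 users converted on A, 150 of 1000 on B.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

The test assumes samples large enough for the normal approximation to hold, so with only a few dozen users per variant the result should be read with caution.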

Creating an A/B test for a chatbot is not easy; it requires considerable effort. At AppQuality we have an integrated approach: Test Automation together with Crowdtesting. By combining the two methodologies, you obtain higher coverage than with standard tests.

With Crowdtesting, we select some of the real users of the chatbot to analyze the UX in a real context. Also, by involving a UX Researcher, we free you from the data collection and analysis phase.

We talked about the training of a chatbot in this article: Use case: the training of a banking Chatbot.

Performance test: speed and security

What do we mean by speed in chatbots? Immediacy. It is one of the main advantages of a chatbot, so the bot must be able to respond immediately. If speed is an essential requirement, the speed test must be planned regularly.

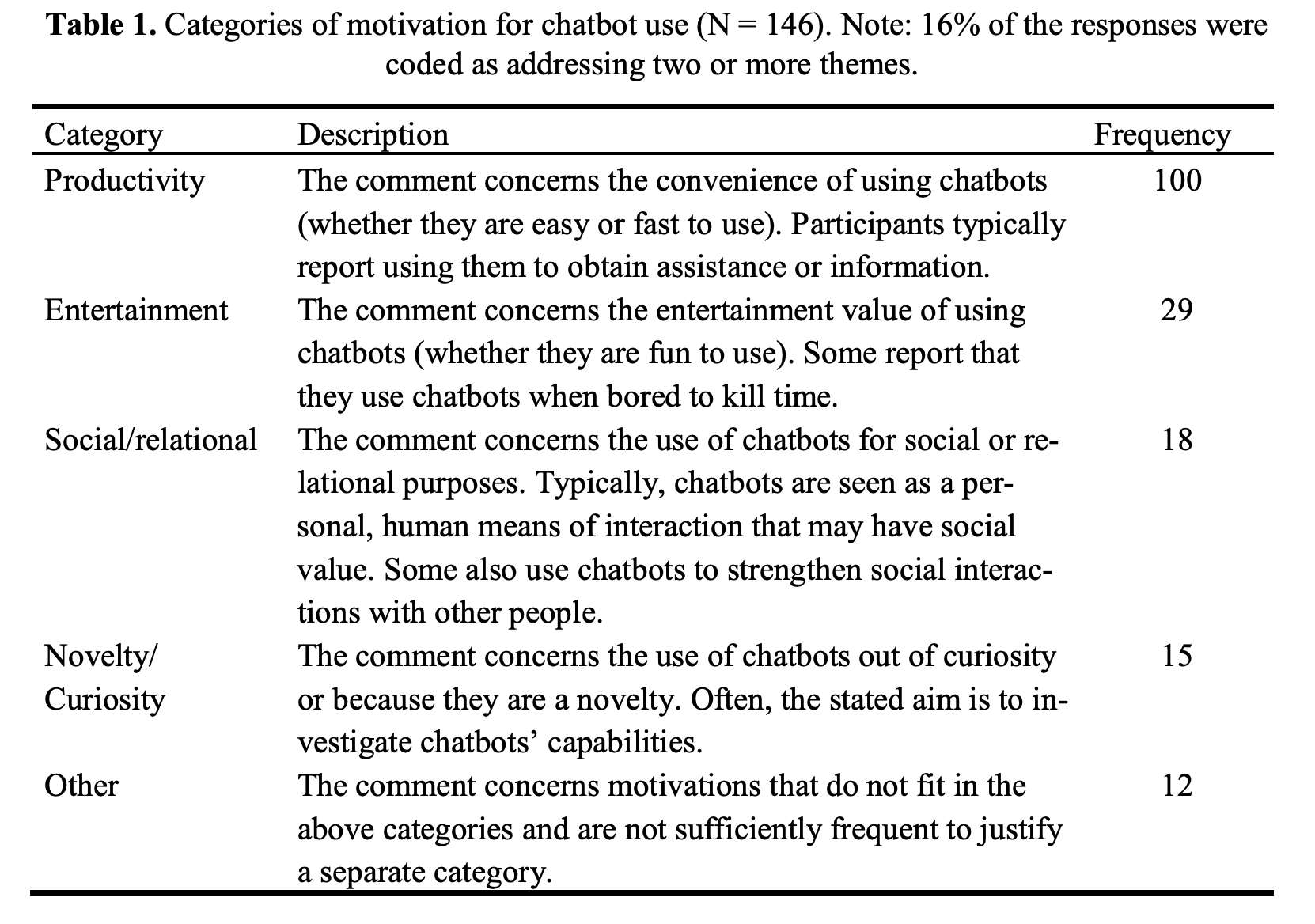

In fact, according to a study from the University of Oslo, 42% of the participants said that ease of use, speed and convenience are the main reasons to use a chatbot.

As with any technology, one downside is the security risk.

The more a chatbot seems like a human, the riskier it can be. The influence of personification and interactivity can lead people to open up about sensitive topics such as stressors (Sannon, S., Stoll, B., DiFranzo, D., Jung, M., Bazarova, N.N).

Threats include malware attacks and DDoS. When an attack targets a specific company, it can lock out the owners and demand a ransom. The alternative? The attacker can threaten to expose the users’ data collected by the chatbot. Other threats are:
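A recurring speed test can be as simple as timing a fixed set of messages and tracking the median and the slowest responses over time. The sketch below assumes a hypothetical `send_message` callable that wraps whatever client your chatbot exposes; here a stand-in function simulates the bot so the example runs on its own.

```python
import math
import statistics
import time
from typing import Callable


def measure_latency(send_message: Callable[[str], str], prompts: list[str]) -> dict[str, float]:
    """Time each prompt and summarize response latency in seconds."""
    if not prompts:
        raise ValueError("at least one prompt is required")
    samples = []
    for prompt in prompts:
        start = time.perf_counter()
        send_message(prompt)
        samples.append(time.perf_counter() - start)
    samples.sort()
    # Nearest-rank 95th percentile: the value below which 95% of samples fall.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median": statistics.median(samples),
        "p95": samples[p95_index],
        "max": samples[-1],
    }


def fake_bot(message: str) -> str:
    """Stand-in for a real chatbot client (assumption for this example)."""
    time.sleep(0.001)
    return f"echo: {message}"


if __name__ == "__main__":
    report = measure_latency(fake_bot, ["hello", "opening hours?", "bye"] * 10)
    print({name: round(value, 4) for name, value in report.items()})
```

Reporting the 95th percentile alongside the median matters because a bot that is fast on average can still leave some users waiting.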

- Identity theft

- Ransomware

- Malware

- Data theft

- Data alteration

- Bot re-purposing

- Phishing

- Whaling

We must distinguish threats from vulnerabilities, i.e. the flaws in a system that give cybercriminals access to it and let them compromise its security. Vulnerabilities are caused by:

- Weak coding

- Poor safeguards

- Unencrypted communications

- Back-door access

- Lack of HTTPS protocol

- Absence of security protocols for employees

- Errors on the platform

All the systems have weaknesses, but you should be the one finding them, not the cybercriminals.
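Some of these weaknesses can be checked mechanically. As a small illustration, the sketch below flags chatbot endpoints that are not served over an encrypted scheme. The URLs are made-up examples and the function name is hypothetical; a real audit would also verify certificates and TLS configuration, which this sketch does not do.

```python
from urllib.parse import urlparse

# Schemes that imply transport encryption (HTTP and WebSocket over TLS).
ENCRYPTED_SCHEMES = {"https", "wss"}


def find_unencrypted_endpoints(urls: list[str]) -> list[str]:
    """Return the URLs whose scheme does not imply encrypted transport."""
    return [url for url in urls if urlparse(url).scheme.lower() not in ENCRYPTED_SCHEMES]


if __name__ == "__main__":
    endpoints = [
        "https://bot.example.com/webhook",
        "http://bot.example.com/api/messages",
        "wss://bot.example.com/socket",
    ]
    for url in find_unencrypted_endpoints(endpoints):
        print("unencrypted:", url)
```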

How to test the chatbot security

There are some security tests that can be run to improve the chatbot integrity. Some of these are:

- Penetration testing: a method to test the vulnerability of a system or a technology. It is also known as “ethical hacking” and it is either run manually by cybersecurity experts or automated.

- API security testing: there are different tools available to test the API integrity. Experts in security have access to advanced software that allows them to identify vulnerabilities that the common programs would not find.

- Comprehensive UX testing: a well-designed technology is often the result of a good User Experience. When testing the chatbot security it is also appropriate to test the UX as a whole.

Other approaches to the chatbot test after the release

Depending on the type of training that has been done, the topics the chatbot responds to and the technologies used, the chatbot may need different types of test. An ad hoc test can be designed for your bot (including voice bots) together with our digital quality consultant.

Sources (other than those mentioned in the article):

Sannon, S., Stoll, B., DiFranzo, D., Jung, M., Bazarova, N.N.: How personification and interactivity influence stress-related disclosures to conversational agents. In: Companion of the 2018 ACM Conference on Computer Supported Cooperative Work and Social Computing, pp. 285–288. ACM, New York (2018)

Vrizlynn L. L. Thing, Morris Sloman, and Naranker Dulay: A Survey of Bots Used for Distributed Denial of Service Attacks. Department of Computing, Imperial College London, 180 Queen's Gate, SW7 2AZ, London, United Kingdom

Nicole Radziwill and Morgan Benton: Evaluating Quality of Chatbots and Intelligent Conversational Agents

Bansal H, Khan R. A review paper on human computer interaction. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2018;8:53